
Overview

To start working with Kafka, we need a cluster. The easiest way to get one is Docker, though you can also download the binaries and run Kafka directly if you prefer. Docker is just one of many options.

Docker-compose

```yaml
networks:
  kafka-net:
    driver: bridge

services:
  # --------------------------------------------------------------------------
  # KAFKA BROKER (in KRaft mode)
  # --------------------------------------------------------------------------
  kafka:
    # We use the Confluent Inc. image, which is popular and well-maintained.
    image: confluentinc/cp-kafka:latest
    container_name: kafka
    networks:
      - kafka-net
    ports:
      # Port for external connections to the Kafka broker from your host machine.
      - "9092:9092"
    environment:
      # --- KRaft settings ---
      # Specifies the roles of this node. In a single-node setup, it's both broker and controller.
      KAFKA_PROCESS_ROLES: 'broker,controller'
      # A unique ID for this node in the cluster.
      KAFKA_NODE_ID: 1
      # Specifies the controller nodes. In a single-node cluster, it's just this node.
      # Format: <node_id>@<hostname>:<port>
      KAFKA_CONTROLLER_QUORUM_VOTERS: '1@kafka:9093'
      # A unique ID for the entire cluster. Generate one with: `docker run --rm confluentinc/cp-kafka kafka-storage random-uuid`
      # The cp-kafka image uses CLUSTER_ID to format the storage directory in KRaft mode.
      KAFKA_CLUSTER_ID: 'MkU3OEV5T0T6a7eSgBNB2w'
      CLUSTER_ID: 'MkU3OEV5T0T6a7eSgBNB2w'

      # --- Listener settings ---
      # The addresses the broker binds to. We define three listeners:
      # - INTERNAL: For communication between services within the Docker network.
      # - EXTERNAL: For communication from your host machine (e.g., your IDE).
      # - CONTROLLER: For KRaft's internal protocol.
      KAFKA_LISTENERS: 'INTERNAL://:29092,EXTERNAL://:9092,CONTROLLER://:9093'
      # Maps listener names to security protocols.
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: 'INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT,CONTROLLER:PLAINTEXT'
      # Specifies how clients (including other services) should connect to this broker.
      # This is what the broker "advertises" to the outside world.
      KAFKA_ADVERTISED_LISTENERS: 'INTERNAL://kafka:29092,EXTERNAL://localhost:9092'
      # The listener used for communication between brokers. Not critical for a single node, but good practice.
      KAFKA_INTER_BROKER_LISTENER_NAME: 'INTERNAL'
      # The listener used by the controller nodes.
      KAFKA_CONTROLLER_LISTENER_NAMES: 'CONTROLLER'

      # --- General Kafka settings ---
      # Automatically create topics if they don't exist when a producer writes to them. Good for development.
      KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
      # Kafka's own internal topics (consumer offsets, transaction state) default to a
      # replication factor of 3, which a single broker cannot satisfy, so we lower them to 1.
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 1
      KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 1
      # Skip the initial consumer-group rebalance delay (3 seconds by default). Handy in development.
      KAFKA_GROUP_INITIAL_REBALANCE_DELAY_MS: 0

  # --------------------------------------------------------------------------
  # KAFKA CONNECT
  # --------------------------------------------------------------------------
  kafka-connect:
    image: confluentinc/cp-kafka-connect:latest
    container_name: kafka-connect
    networks:
      - kafka-net
    ports:
      # Port for the Kafka Connect REST API.
      - "8083:8083"
    depends_on:
      - kafka
    environment:
      # The address of the Kafka broker(s) for Connect to use. We use the internal listener.
      CONNECT_BOOTSTRAP_SERVERS: 'kafka:29092'
      # A unique ID for this Connect cluster.
      CONNECT_GROUP_ID: 'connect-cluster'
      # The topics where Connect stores its configuration, offsets, and status.
      CONNECT_CONFIG_STORAGE_TOPIC: 'connect-configs'
      CONNECT_OFFSET_STORAGE_TOPIC: 'connect-offsets'
      CONNECT_STATUS_STORAGE_TOPIC: 'connect-status'
      # Replication factor for these internal topics. Must be 1 in a single-node setup.
      CONNECT_CONFIG_STORAGE_REPLICATION_FACTOR: 1
      CONNECT_OFFSET_STORAGE_REPLICATION_FACTOR: 1
      CONNECT_STATUS_STORAGE_REPLICATION_FACTOR: 1
      # Converters that (de)serialize record keys and values as JSON.
      CONNECT_KEY_CONVERTER: 'org.apache.kafka.connect.json.JsonConverter'
      CONNECT_VALUE_CONVERTER: 'org.apache.kafka.connect.json.JsonConverter'
      CONNECT_INTERNAL_KEY_CONVERTER: 'org.apache.kafka.connect.json.JsonConverter'
      CONNECT_INTERNAL_VALUE_CONVERTER: 'org.apache.kafka.connect.json.JsonConverter'
      # The hostname this worker advertises for its REST API (reachable from other containers on kafka-net).
      CONNECT_REST_ADVERTISED_HOST_NAME: 'kafka-connect'
      # Location where connector plugins are stored.
      CONNECT_PLUGIN_PATH: '/usr/share/java,/usr/share/confluent-hub-components'

  # --------------------------------------------------------------------------
  # REDPANDA CONSOLE (Web UI)
  # --------------------------------------------------------------------------
  redpanda-console:
    image: docker.redpanda.com/redpandadata/console:latest
    container_name: redpanda-console
    networks:
      - kafka-net
    ports:
      # Port to access the web UI from your browser.
      - "8080:8080"
    depends_on:
      - kafka
      - kafka-connect
    environment:
      # The address of the Kafka broker(s) for the console to connect to.
      KAFKA_BROKERS: "kafka:29092"
      # The Kafka Connect cluster for the console to manage.
      KAFKA_CONNECT_ENABLED: "true"
      KAFKA_CONNECT_CLUSTERS_NAME: "connect-cluster"
      KAFKA_CONNECT_CLUSTERS_URL: "http://kafka-connect:8083"
```
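Every `KAFKA_*` variable in the broker's `environment` block ends up as a regular Kafka property, and every `CONNECT_*` variable in the Connect block does the same. The sketch below shows that convention in its simplest form: drop the prefix, lowercase the rest, and turn underscores into dots. This is my simplification, not the images' actual startup code. The real Confluent images also escape property names that themselves contain `_` or `-`, and they skip a few variables such as JVM options; the sketch ignores both. `env_to_property` is a hypothetical helper name.

```python
def env_to_property(env_name: str, prefix: str = "KAFKA_") -> str:
    """Turn an image env var name into a .properties key (simplified sketch).

    Only the basic rule is applied: strip the prefix, lowercase, '_' -> '.'.
    Escaping of '_' / '-' inside property names is NOT modelled here.
    """
    if not env_name.startswith(prefix):
        raise ValueError(f"{env_name!r} does not start with {prefix!r}")
    return env_name[len(prefix):].lower().replace("_", ".")


if __name__ == "__main__":
    print(env_to_property("KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR"))  # offsets.topic.replication.factor
    print(env_to_property("CONNECT_BOOTSTRAP_SERVERS", prefix="CONNECT_"))  # bootstrap.servers
```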

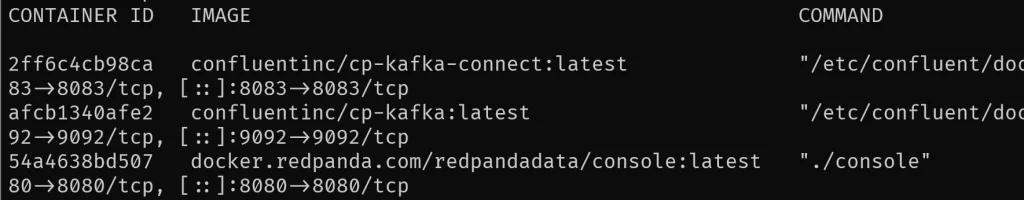

Here I have a simple setup with a couple of extra services: Kafka Connect and Redpanda Console (for a nice web UI).
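Kafka Connect and the console run inside the `kafka-net` network, so they reach the broker through the INTERNAL listener (`kafka:29092`). That is why `CONNECT_BOOTSTRAP_SERVERS` and `KAFKA_BROKERS` use that address. A client running on your host cannot resolve `kafka`, and port 29092 is not published, so it must use the EXTERNAL listener (`localhost:9092`) instead. The hypothetical helpers below (`parse_listeners`, `bootstrap_for`) make that choice explicit by parsing the `KAFKA_ADVERTISED_LISTENERS` string from the compose file. They are an illustration only, not part of any Kafka client.

```python
# Value copied from KAFKA_ADVERTISED_LISTENERS in the compose file.
ADVERTISED = "INTERNAL://kafka:29092,EXTERNAL://localhost:9092"


def parse_listeners(spec: str) -> dict[str, tuple[str, int]]:
    """Split 'NAME://host:port,...' into {NAME: (host, port)}; host may be ''."""
    listeners = {}
    for entry in spec.split(","):
        name, _, address = entry.strip().partition("://")
        host, _, port = address.rpartition(":")
        listeners[name] = (host, int(port))
    return listeners


def bootstrap_for(spec: str, inside_docker_network: bool) -> str:
    """Hypothetical helper: pick the bootstrap address based on where the client runs."""
    name = "INTERNAL" if inside_docker_network else "EXTERNAL"
    host, port = parse_listeners(spec)[name]
    return f"{host}:{port}"


if __name__ == "__main__":
    print(bootstrap_for(ADVERTISED, inside_docker_network=True))   # kafka:29092
    print(bootstrap_for(ADVERTISED, inside_docker_network=False))  # localhost:9092
```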

Save the compose file as `docker-compose.yml`, then simply run `docker-compose up`, and you should have a cluster with one broker up and running.
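The containers can be running before the broker inside them is ready to accept clients. The Python sketch below is a minimal readiness check. It assumes you only need the EXTERNAL port published in the compose file (`localhost:9092`) to accept TCP connections. An open port only means something is listening, not that the broker has finished starting, so treat this as a first check. `wait_for_port` is a hypothetical helper name.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds, or give up after `timeout` seconds.

    Returns True as soon as a connection is accepted, False if the deadline passes.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a plain TCP connection; no Kafka protocol involved.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("localhost", 9092)` returns `True` once the broker's published port accepts connections, or `False` after 60 seconds.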

If you go to http://localhost:8080, you can see the cluster's overview in Redpanda Console.
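Besides the UI, you can check Kafka Connect directly through its REST API, which the compose file publishes on port 8083. `GET /connectors` returns a JSON array of connector names, which should be empty on a fresh setup. The sketch below uses only the Python standard library; `list_connectors` is a hypothetical helper name.

```python
import json
import urllib.request


def list_connectors(base_url: str = "http://localhost:8083", timeout: float = 5.0) -> list[str]:
    """Return the connector names reported by the Kafka Connect REST API (GET /connectors)."""
    with urllib.request.urlopen(f"{base_url}/connectors", timeout=timeout) as resp:
        # The endpoint responds with a JSON array of names, e.g. [] on a fresh worker.
        return json.load(resp)
```

On a fresh setup `list_connectors()` should return `[]`. It raises an error (for example `urllib.error.URLError`) if Connect is not reachable yet, which is common for a little while after startup.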

I build software that solves problems. I also love writing about and documenting the things I learn or want to learn.